It has often happened to me that Facebook stops fetching the correct preview for a URL. In that case, scraping the URL again with Facebook’s Sharing Debugger usually makes it fetch the correct preview. But what if Facebook doesn’t fetch the correct preview for your website’s URLs and the Sharing Debugger’s scrape isn’t working either?

Has it happened to you?

For the first time in four years of running my website and using Facebook, this happened to me: Facebook was not fetching the previews of my website’s URLs when I shared them on my page or anywhere else on Facebook. Worse, the Sharing Debugger was not scraping the URLs either.

Here’s Why the Facebook Sharing Debugger Is Not Scraping Preview Data

If Facebook’s Sharing Debugger can’t scrape your website’s URLs, something is genuinely wrong on your site’s end.

To solve this problem, you first need to understand it. Facebook generally fetches a preview of a website URL automatically. However, to control how your content appears on Facebook, you can mark up your pages with Open Graph tags.
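For reference, Open Graph markup is a set of `<meta property="og:...">` tags in a page’s `<head>`. The sketch below is purely illustrative: the helper name `open_graph_tags` and the sample values are hypothetical, and it covers only the common `og:type`, `og:title`, `og:description`, `og:url` and `og:image` properties. It shows roughly what the tags look like, with each value HTML-escaped.

```python
from html import escape


def open_graph_tags(title: str, description: str, url: str, image: str,
                    og_type: str = "article") -> str:
    """Return Open Graph <meta> tags for a page's <head> (hypothetical helper)."""
    properties = {
        "og:type": og_type,
        "og:title": title,
        "og:description": description,
        "og:url": url,
        "og:image": image,
    }
    # Escape each value (quotes included) so it is safe inside content="...".
    return "\n".join(
        f'<meta property="{name}" content="{escape(value, quote=True)}">'
        for name, value in properties.items()
    )


if __name__ == "__main__":
    print(open_graph_tags(
        title="Fixing Facebook Link Previews",
        description="What to do when the Sharing Debugger cannot scrape a URL.",
        url="https://example.com/facebook-preview-fix/",
        image="https://example.com/images/preview.png",
    ))
```

Note that correct tags alone don’t help if Facebook’s crawler can’t reach the page in the first place, which is exactly the situation described next.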

In my case, though, Facebook suddenly stopped crawling my website’s URLs. Whether it’s Google, Facebook or any other platform, each one needs to crawl your pages in order to fetch the correct preview of your site’s URLs.

So, the very first thing you need to check is whether the Facebook crawler is able to crawl your website at all.

How can you tell?

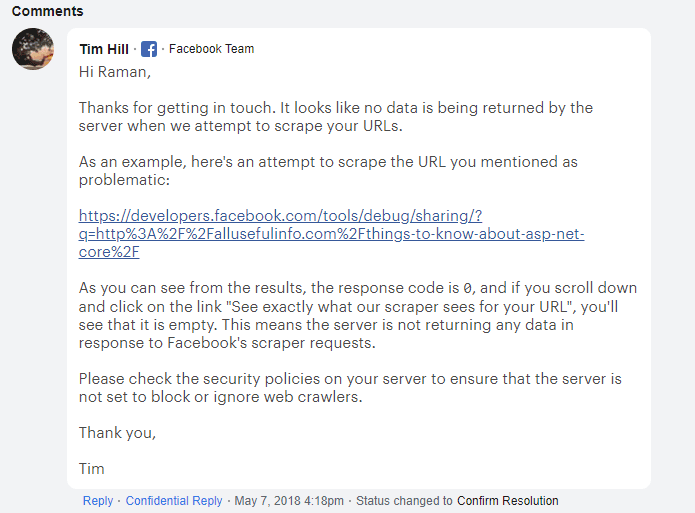

When you enter your URL in the Facebook Sharing Debugger, the results show a response code of 0. If you scroll down and click the link “See exactly what our scraper sees for your URL”, you’ll find that it is empty. This means the server is not returning any data in response to Facebook’s scraper requests.

In other words, the server hosting your site is somehow blocking or ignoring Facebook’s web crawlers, or has blacklisted the IP addresses Facebook uses to crawl your website.
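One quick check you can run yourself is to request a page with Facebook’s crawler user agent (`facebookexternalhit/1.1`) and see whether your server answers. This is a minimal sketch using only Python’s standard library; the helper names are hypothetical. An important caveat: the request comes from your own IP address, not Facebook’s, so it can only catch blocking based on the user agent. It cannot reproduce IP-based blocking like the one described here, and for that the Sharing Debugger (or your host) remains the real test.

```python
import urllib.error
import urllib.request

# User agent string the Facebook crawler identifies itself with.
FACEBOOK_UA = "facebookexternalhit/1.1 (+http://www.facebook.com/externalhit_uatext.php)"


def build_crawler_request(url: str) -> urllib.request.Request:
    """Build a GET request that mimics the Facebook crawler's User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": FACEBOOK_UA})


def fetch_as_crawler(url: str, timeout: float = 10.0) -> tuple:
    """Return (HTTP status, body length); status 0 means no HTTP response at all."""
    try:
        with urllib.request.urlopen(build_crawler_request(url), timeout=timeout) as resp:
            return resp.status, len(resp.read())
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 403.
        return err.code, len(err.read())
    except OSError:
        # Connection refused or reset, DNS failure, timeout: nothing came back.
        return 0, 0


if __name__ == "__main__":
    import sys

    status, size = fetch_as_crawler(sys.argv[1])
    print(f"status={status} bytes={size}")
```

If this returns 200 with a non-empty body while the Sharing Debugger still reports response code 0, the user agent is probably not the problem, which points towards blocking at the IP level.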

Here’s the Fix! Give the Facebook Crawler Access to Your Site

When this problem started happening to me, I immediately visited Facebook’s developer support and reported a bug. After some time, I got a reply from the support team telling me that my web hosting server was blocking the IPs the Facebook crawler uses to access the website.

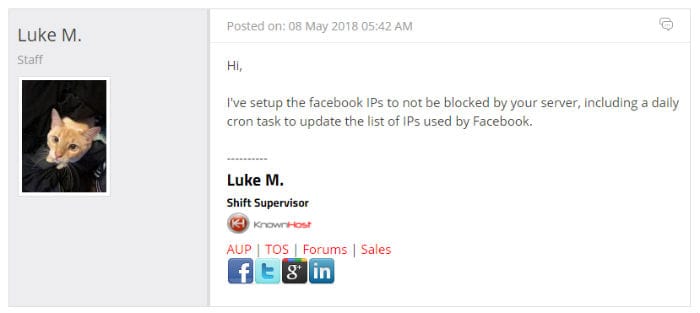

After that, I contacted my web host and asked them to whitelist all the Facebook crawler IPs. At the time of writing this article, we’re hosted on KnownHost, whose support is very responsive and trustworthy. I got a reply very quickly asking which crawler IPs needed to be whitelisted.

I then found this documentation, which gives the command for looking up the Facebook crawler IPs, which change often. I shared this documentation with my host’s support desk, and the team immediately configured the server not to block any of the crawler IPs, including a daily cron task to update the list of IPs used by Facebook.
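A daily update like that could look roughly like the sketch below. It assumes the crawler IPs are the routes originated by Facebook’s autonomous system, AS32934, as listed in the RADB whois database, and that the `whois` command-line tool is installed. The function names are hypothetical, and how the resulting list is loaded into a firewall allowlist depends entirely on your host’s setup.

```python
import ipaddress
import subprocess

# Assumption: Facebook crawler IPs fall within routes originated by AS32934.
WHOIS_ARGS = ["whois", "-h", "whois.radb.net", "--", "-i origin AS32934"]


def fetch_whois_output() -> str:
    """Query RADB for routes originated by AS32934 (requires the whois CLI)."""
    result = subprocess.run(WHOIS_ARGS, capture_output=True, text=True,
                            check=True, timeout=60)
    return result.stdout


def parse_routes(whois_text: str) -> list:
    """Extract unique IPv4/IPv6 prefixes from 'route:' and 'route6:' lines."""
    networks = set()
    for line in whois_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() in ("route", "route6"):
            networks.add(ipaddress.ip_network(value.strip(), strict=False))
    # Sort by IP version first so IPv4 and IPv6 networks are never compared.
    return sorted(networks, key=lambda net: (net.version, net))


def is_allowed(ip: str, networks: list) -> bool:
    """Return True if the address falls inside any of the given prefixes."""
    address = ipaddress.ip_address(ip)
    return any(address in net for net in networks)


if __name__ == "__main__":
    # Print one prefix per line, e.g. for feeding into a firewall allowlist.
    for net in parse_routes(fetch_whois_output()):
        print(net)
```

Run once a day from cron, a script like this would keep the list current as Facebook’s IPs change, which is the same idea as the daily task the host set up.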

And amazingly, just a few seconds after the crawler IPs were whitelisted, the Facebook Sharing Debugger started scraping the URLs again, and Facebook automatically started fetching the correct previews.